Meet Coop V.0

In July we announced Coop and Osprey as our first free, open source trust and safety tools. We open sourced Osprey late last year, and we are now releasing Coop as open source as well.

Whether an established platform safety team or a startup managing user and AI-generated content, teams face a fundamental challenge: how does one process millions of pieces of flagged content efficiently while protecting reviewer wellbeing, ensuring consistent policy enforcement, and maintaining audit trails for compliance? Coop V.0 addresses these challenges by providing human-centered review and automation infrastructure that combines smart queue orchestration, context-rich review interfaces, and specialized child safety workflows by design – all with resilience and wellness features to keep reviewers safe from harmful content exposure.

ROOST is on a mission to make safety tooling available to platforms and developers of all sizes. Many platforms and developers handle content review manually and with tools like spreadsheets, because the available review consoles are too expensive, cannot be customized, or have prescriptive requirements, like a specific cloud provider. Coop works whether you're a startup with 1,000 daily reviews, or an established platform with millions of posts, running on your infrastructure with full control over data retention and privacy.

Under the Hood: How Coop Works

Coop sits between your platform and your reviewers. When content is uploaded to your platform, it is sent to Coop through a REST API call that includes all relevant data (content, user, context). User-defined rules in the Coop rules engine determine whether an automated action can be applied; otherwise, the item is routed for manual review via configurable routing rules. The signal abstraction layer lets you plug in external AI/ML systems or write heuristic rules to score content. Special rules can be written to handle user-flagged or appealed items. Reviewers get a context-rich case to review and render a verdict. Human and automated verdicts are exposed through a webhook that your enforcement system can then act on.

The system runs on your infrastructure with your choice of data warehouse. You can configure ClickHouse or Snowflake out-of-the-box for analytics via environment variables or connect it to your system of choice. Docker Compose handles deployment whether you're running locally or in production.

From Detection to Decision

Here's a typical workflow. Your platform submits data via REST API:

    POST /actions
    Content-Type: application/json
    x-api-key: your-api-key

    {
      "actionId": "user_reported_content",
      "itemId": "post_abc123",
      "itemTypeId": "post",
      "content": {
        "text": "This is spam content",
        "author_id": "user_456"
      },
      "policyIds": ["spam_policy"],
      "actorId": "user_reporter_789"
    }

Coop's automated action rules evaluate this against configured policies and aggregate signals from any ML classifiers you've integrated (OpenAI Moderation, Google Content Safety, your custom models). The rules can be configured based on thresholds to take an auto-action or route it for human review.
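For teams calling Coop from a Python service, the submission shown at the start of this workflow might be assembled roughly as follows. This is a minimal sketch, not an official client: the base URL (`http://localhost:8080`) and the helper name `build_action_request` are assumptions for illustration, while the path, headers, and payload fields are taken from the example request.

```python
import json
import urllib.request

# Assumption: address of a locally running Coop instance; adjust for your deployment.
COOP_BASE_URL = "http://localhost:8080"


def build_action_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST /actions request for Coop."""
    return urllib.request.Request(
        url=f"{COOP_BASE_URL}/actions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


# Same payload as the example request in this post.
payload = {
    "actionId": "user_reported_content",
    "itemId": "post_abc123",
    "itemTypeId": "post",
    "content": {"text": "This is spam content", "author_id": "user_456"},
    "policyIds": ["spam_policy"],
    "actorId": "user_reporter_789",
}

request = build_action_request("your-api-key", payload)
# To actually send it: urllib.request.urlopen(request, timeout=10)
```

Building the request separately from sending it keeps the payload easy to log and unit test before it reaches a live Coop instance.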

Reviewers see the content with complete context as you define it: this can include user information, signal scores, which rules triggered, or related reports. They make a decision, and Coop executes it by calling your platform's API:

    {
      "item": {"id": "abc123", "typeId": "post"},
      "action": {"id": "delete_content"},
      "policies": [{"id": "spam_policy", "name": "Spam"}],
      "rules": [{"id": "ml_spam_detection"}]
    }

Your platform receives this webhook and performs the actual enforcement. The architecture is built around a few key components.

Item Types define your content schema (posts, comments, profiles, whatever your platform has).

Actions map to your API endpoints.

Policies categorize harm and tie to actions for tracking and compliance.

Review queues organize work with configurable routing rules.

Signals are ML classifier outputs that feed into routing decisions and reviewer interfaces.
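To make the enforcement step concrete, here is a minimal sketch of how a platform might handle the webhook payload shown earlier. The handler registry, the `delete_content` function, and the shape of the returned audit record are hypothetical; only the payload fields (`item`, `action`, `policies`, `rules`) come from the example above.

```python
from typing import Callable, Dict


def delete_content(item_id: str) -> str:
    """Hypothetical platform-side enforcement; a real system would call its own API here."""
    return f"deleted {item_id}"


# Hypothetical mapping from Coop action IDs to platform enforcement functions.
ACTION_HANDLERS: Dict[str, Callable[[str], str]] = {
    "delete_content": delete_content,
}


def handle_webhook(payload: dict) -> dict:
    """Dispatch a Coop verdict to the matching handler and return an audit record."""
    item = payload["item"]
    action_id = payload["action"]["id"]
    handler = ACTION_HANDLERS.get(action_id)
    if handler is None:
        raise ValueError(f"No handler registered for action {action_id!r}")
    result = handler(item["id"])
    return {
        "item_id": item["id"],
        "item_type": item["typeId"],
        "action": action_id,
        "policies": [p["id"] for p in payload.get("policies", [])],
        "rules": [r["id"] for r in payload.get("rules", [])],
        "result": result,
    }


example = {
    "item": {"id": "abc123", "typeId": "post"},
    "action": {"id": "delete_content"},
    "policies": [{"id": "spam_policy", "name": "Spam"}],
    "rules": [{"id": "ml_spam_detection"}],
}
record = handle_webhook(example)
```

Rejecting unknown action IDs outright, rather than ignoring them, is one way to surface a mismatch between the actions configured in Coop and the ones your platform actually implements.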

The Review Experience

Reviewers log into Coop's web interface and see their assigned queues with counts, priorities, and SLA status. They select a queue and start reviewing. For each item, they see the content itself rendered for the item type, user submission history and reputation scores, signal scores from configured classifiers, and related reports or appeals. Available actions are determined by the policies that apply.

Coop provides research-backed reviewer wellness features at both the organization level and individual level. This includes grayscale images, muted videos by default, and a sliding blurring scale. Organizations configure baseline protections, and individual reviewers can adjust settings based on their preferences.
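As a rough illustration of how organization baselines and individual preferences could combine, the sketch below merges reviewer overrides onto an organization default. The field names, the 0 to 10 blur range, and the rule that reviewer preferences replace the baseline are all assumptions for illustration; Coop's actual settings model may differ.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class WellnessSettings:
    grayscale_images: bool = True
    mute_videos: bool = True
    blur_level: int = 5  # assumed scale: 0 (no blur) to 10 (maximum blur)


def effective_settings(org_baseline: WellnessSettings, reviewer_overrides: dict) -> WellnessSettings:
    """Apply a reviewer's preferences on top of the organization baseline."""
    merged = replace(org_baseline, **reviewer_overrides)
    # Keep the blur level inside the assumed valid range.
    clamped = max(0, min(10, merged.blur_level))
    return replace(merged, blur_level=clamped)


org = WellnessSettings()
reviewer = effective_settings(org, {"blur_level": 12, "mute_videos": False})
```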

Child Safety Technology for All

While we were preparing Coop for its V.0 open source release, we integrated new capabilities specifically designed for child safety. We view child safety as the highest-stakes technical challenge with the broadest organizational need.

Hash Matching For All

Meta's hasher-matcher-actioner (HMA) enables organizations to detect known, harmful content through hash matching. In June 2025, the Technology Coalition transitioned HMA office hours to ROOST so that more organizations could get onboarded to hash matching, troubleshoot live, and meet other adopters. Since then, the HMA community has welcomed new platforms adopting HMA, investigated issues that impact multiple adopters, and fleshed out the documentation needed to make HMA a sustainable safety project.

Coop V.0 integrates with HMA, providing a configurable way to match known CSAM, non-consensual intimate imagery (NCII), terrorist and violent extremist content (TVEC), and any internal hash banks you maintain. Setup requires API credentials from supported hash databases like NCMEC and Tech Against Terrorism.

Production deployments point to HMA through HMA_SERVICE_URL, which holds the HMA URL; locally, HMA runs on port 5000. (Note for macOS users: port 5000 is also the default port for AirPlay Receiver, so you may want to choose a different port or turn AirPlay Receiver off.) HMA runs as a Docker container alongside Coop's other services:

    # docker-compose.yaml
    hma:
      build:
        context: ./hma
        dockerfile: Dockerfile
      environment:
        - POSTGRES_PASSWORD=postgres123
        - POSTGRES_USER=postgres
        - POSTGRES_DB=postgres
        - POSTGRES_HOST=postgres
      ports:
        - '5000:5000'

If you're using HMA with third party signal banks, the setup requires API credentials from supported hash databases like NCMEC and Tech Against Terrorism.
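HMA performs the matching itself, so you do not need to write this code. Purely to illustrate the idea behind perceptual hash matching, the sketch below compares a content hash against several hash banks using Hamming distance. It assumes 256-bit hashes encoded as hex strings; the bank names, function names, and the 31-bit threshold are illustrative assumptions, not HMA's API or configuration.

```python
from typing import Dict, List


def hamming_distance(hash_a_hex: str, hash_b_hex: str) -> int:
    """Count the bits that differ between two equal-length hex-encoded hashes."""
    if len(hash_a_hex) != len(hash_b_hex):
        raise ValueError("hashes must have the same length")
    return bin(int(hash_a_hex, 16) ^ int(hash_b_hex, 16)).count("1")


def match_banks(content_hash: str, banks: Dict[str, List[str]], max_distance: int = 31) -> List[str]:
    """Return the names of banks holding a hash within max_distance bits of content_hash."""
    return [
        name
        for name, hashes in banks.items()
        if any(hamming_distance(content_hash, h) <= max_distance for h in hashes)
    ]


# Toy 256-bit hashes (64 hex characters); real hashes come from a hashing algorithm.
known_hash = "f" * 64
unrelated_hash = "0" * 64
near_duplicate = "f" * 63 + "e"  # differs from known_hash by one bit

banks = {"internal_bank": [known_hash], "other_bank": [unrelated_hash]}
matches = match_banks(near_duplicate, banks)
```

A distance threshold, rather than exact equality, is what lets perceptual hashing catch slightly altered copies of known content.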

Free API-Powered Child Safety Tools

We wanted platforms of any size to have access to cutting-edge child safety detection from day one. Coop V.0 includes pre-built integrations with leading child safety classification APIs, providing coverage across all content modalities: images, video, and text.

Google's Content Safety API provides AI-powered detection of previously unseen CSAM in images and video. While Coop V.0 supports image classification, we'll be adding video and image embedding support in future releases. The API returns priority scores indicating the likelihood that content contains CSAM. Higher scores can be configured to route content to higher-priority queues, ensuring reviewers tackle the highest-risk material first. Paired with HMA, this provides comprehensive CSAM detection covering both known and novel content. The API is provided at no cost through Google's child safety toolkit program (subject to approval).
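The idea of letting higher scores surface first can be sketched with a simple priority queue. This is an illustration only: the class name, item IDs, and score values are hypothetical, and the score is assumed to be a number where larger means higher priority. Coop's own queue routing is configured through its routing rules rather than written by hand like this.

```python
import heapq
from typing import List, Tuple


class PriorityReviewQueue:
    """Serve items with the highest priority score first; ties keep arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._counter = 0  # tie-breaker that preserves insertion order

    def push(self, item_id: str, priority_score: float) -> None:
        # heapq is a min-heap, so negate the score to pop the largest first.
        heapq.heappush(self._heap, (-priority_score, self._counter, item_id))
        self._counter += 1

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = PriorityReviewQueue()
queue.push("item_a", 0.20)
queue.push("item_b", 0.95)
queue.push("item_c", 0.60)
review_order = [queue.pop() for _ in range(len(queue))]
```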

OpenAI's Moderation API provides classification across multiple harm categories including sexual content involving minors, violence, self-harm, and harassment. Text-based grooming and solicitation often precede image or video abuse. By integrating text classification alongside image and video detection, platforms can identify concerning behavior earlier in the chain and intervene before visual abuse material appears. The API is available free of charge with rate limits per account and requires an OpenAI developer account.

Organizations can use the Content Safety API and Moderation API individually or in combination with other signals.
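One way to picture combining these signals is a threshold rule that looks at the strongest score across all classifiers. The sketch below is a simplified assumption of such a rule: the threshold values, signal names, and the choice to aggregate by taking the maximum are illustrative, and in Coop these decisions are expressed through configured rules rather than code like this.

```python
from typing import Dict

# Illustrative thresholds; real values depend on your policies and classifiers.
AUTO_ACTION_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.50


def decide(signal_scores: Dict[str, float]) -> str:
    """Return 'auto_action', 'human_review', or 'no_action' from the highest signal score."""
    if not signal_scores:
        return "no_action"
    top_score = max(signal_scores.values())
    if top_score >= AUTO_ACTION_THRESHOLD:
        return "auto_action"
    if top_score >= REVIEW_THRESHOLD:
        return "human_review"
    return "no_action"


high_confidence = decide({"content_safety": 0.30, "moderation": 0.97})
borderline = decide({"content_safety": 0.62, "moderation": 0.10})
benign = decide({"content_safety": 0.05, "moderation": 0.02})
```

Taking the maximum means any single confident classifier can escalate an item; a weighted average or per-signal thresholds are alternatives when classifiers differ in reliability.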

Running Coop: Deploy Anywhere

Coop runs on your infrastructure with full control over data retention and privacy. It can be deployed via Docker Compose for both local development and production environments.

Coop supports ClickHouse and Snowflake, configured via environment variables. Switching between data warehouses requires zero code changes. For ClickHouse deployments, we've optimized schemas for trust and safety workloads with MergeTree engines, date partitioning, and query-aligned sort keys.
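Selecting a warehouse from environment variables, without touching application code, can be sketched as a small factory. The variable names (`ANALYTICS_WAREHOUSE`, `CLICKHOUSE_HOST`, `SNOWFLAKE_ACCOUNT`) and the `Warehouse` type are hypothetical and chosen only for illustration; consult Coop's documentation for the actual configuration keys.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Mapping


@dataclass(frozen=True)
class Warehouse:
    kind: str
    target: str  # host or account the analytics writer would connect to


def _clickhouse(env: Mapping[str, str]) -> Warehouse:
    return Warehouse("clickhouse", env.get("CLICKHOUSE_HOST", "localhost"))


def _snowflake(env: Mapping[str, str]) -> Warehouse:
    return Warehouse("snowflake", env["SNOWFLAKE_ACCOUNT"])


# Hypothetical registry of supported backends.
FACTORIES: Dict[str, Callable[[Mapping[str, str]], Warehouse]] = {
    "clickhouse": _clickhouse,
    "snowflake": _snowflake,
}


def select_warehouse(env: Mapping[str, str]) -> Warehouse:
    """Pick the analytics backend named in the environment (defaults to ClickHouse)."""
    kind = env.get("ANALYTICS_WAREHOUSE", "clickhouse").lower()
    if kind not in FACTORIES:
        raise ValueError(f"Unsupported warehouse: {kind!r}")
    return FACTORIES[kind](env)


default_wh = select_warehouse({})
snowflake_wh = select_warehouse({"ANALYTICS_WAREHOUSE": "snowflake", "SNOWFLAKE_ACCOUNT": "acme"})
```

In a running service you would pass `os.environ` instead of a literal dictionary; the rest of the application only sees the `Warehouse` value, which is what keeps the switch free of code changes.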

If you use AWS, we've included reference deployment code that documents our production-tested architecture. Organizations on other cloud providers or running on-premises can use the Docker Compose setup without modification.

The Journey to Open Source

The journey to Coop being released as open source is a bit unique. We acquired the codebase of Cove, which was a hosted moderation product built by a startup. The codebase was well-architected with configurable queue routing, a comprehensive review interface, and a flexible rules engine. However, it was built as a managed service with dependencies that prevented open source distribution. We've spent the past several months working through the codebase to get it into a releasable "V.0" state, which is what we're making available today.

Preparing Coop for open source release meant fundamentally rethinking how the system operates. The original Snowflake-only analytics pipeline gave way to a data warehouse abstraction layer that supports ClickHouse and PostgreSQL alongside Snowflake, giving organizations genuine deployment flexibility. Eliminating AWS dependencies required replacing managed services with Docker Compose configurations and rebuilding the entire CI/CD pipeline for GitHub's hosted runners, removing any requirement for cloud infrastructure.

Making a proprietary SaaS codebase ready for public distribution required careful sanitization. The original code was designed for multi-user data and schema models, which we replaced with single-user self-hosted infrastructure. Perhaps most critically, the minimal documentation that served our internal team had to evolve into comprehensive guides that would let someone unfamiliar with the codebase get Coop running locally, understand the architecture, and contribute improvements back to the project.

What's Included in V.0

This V.0 release includes the core review capabilities alongside specialized child safety workflow functionality:

Context-rich review console for content flagged by ML models, user reports, or detection rules

Flexible signal abstraction for integrating classifiers

Queue routing and orchestration based on signals, priority, and policy

Reviewer wellness capabilities built-in (per organization/individual)

Automated and manual enforcement workflows with complete audit trails

Appeals handling with dedicated review workflows

Compatible with OSS storage (Postgres, Scylla 5.2, ClickHouse), with the option to plug in other storage

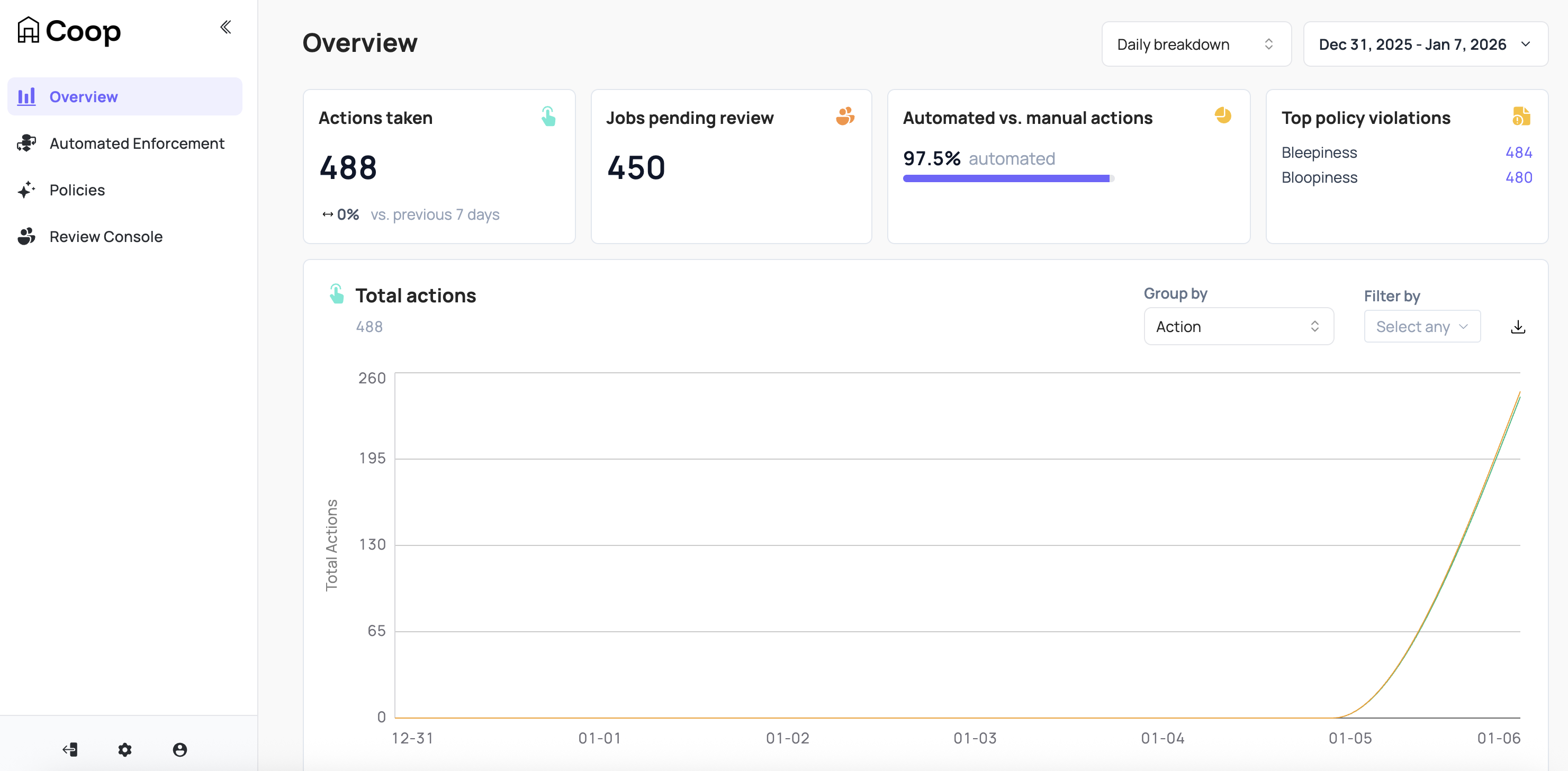

Insightful dashboards for tracking performance and feedback to improve rules and policies

HMA integration for hash matching (CSAM, TVEC, NCII, internal hash banks, etc.)

Comprehensive child safety tools for known CSAM, novel CSAM detection, and reporting

What's Next

As we've shared in our roadmap, this initial V.0 release of Coop is focused on specialized child safety workflow functionality alongside the core review capabilities. We'll be improving Coop by building systematic quality into review workflows and creating feedback loops between review decisions and investigation systems. To do so, we'll start by tackling the following features and improvements:

In-tool Quality Assurance (QA) for reviewer decisions / Golden Sets

Enhanced NCMEC reporting designed for actionable reports

Config-based integration with open safety models from the ROOST Model Community

Expanded search

AI assistance

INHOPE Universal Schema support

Long Term Vision

ROOST's mission is to make open source the default for trust and safety infrastructure. Coop and Osprey are the two flagship projects in this ecosystem, built around the DIRE framework (Detection, Investigation, Review, Enforcement): Osprey handles Detection and Investigation by tracking user behavior patterns and investigating coordinated abuse at scale. Coop manages Review and Enforcement through human oversight, queue management, and policy-based decisions. Most platforms start with Coop for content moderation, then adopt Osprey as they mature and need to combat ban evasion and behavioral threats. Right now they operate independently, but we're building toward interoperability where human reviewers in Coop see what Osprey flagged, make decisions, and those decisions inform Osprey's future behavior, creating a complete trust and safety system.

The Beginning of the Open Source Coop Project

Beyond the V.0 code release, today also marks the beginning of Coop as a true open source project: planning, discussions, issue tracking, project management, pull requests, and code review (all of the work beyond the code itself) will happen transparently and in the open from here on out. And most importantly, you can get involved, too!

If you're an engineer at an organization working on trust and safety tools, a trust and safety professional looking to improve the state of tools for everyone, or an open source developer looking for an interesting project to jump into, we're more than happy for you to join our community and project.

Even if you're not ready to contribute code or don't think of yourself as technical, we want to hear from you. We've built this for everyone, and your feedback makes us better and keeps us accountable. We are building toward a future where open source is the industry standard for safety tech. Coop V.0 is another step toward making the tools platforms need to protect their communities accessible to everyone. We're looking forward to seeing what you build with it.